Part B — Using API calls to enrich your data

This post is the second part out of 3. In the previous post (Part A — handling BeautifulSoup and avoiding blocks) we gave a brief introduction of web scraping and spoke about more advanced techniques on how to avoid being blocked by a website.

In this post, we show 2 examples of how to use API calls for data enrichment: Genderize.io and Aylien Text Analysis.

Using API for data enrichment

Genderize

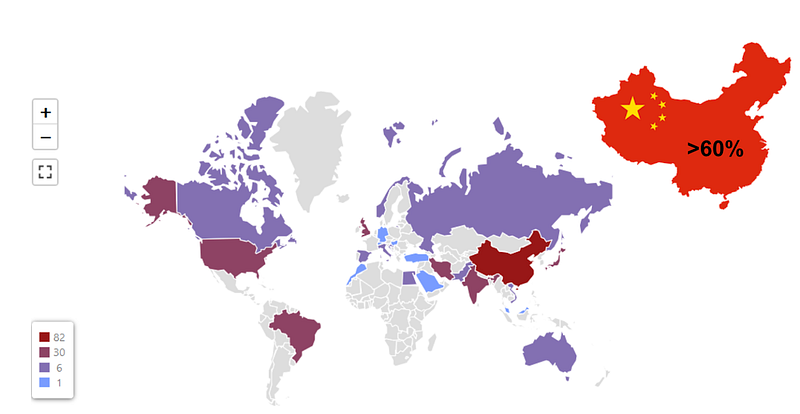

Genderize uses the first name of an individual to predict their gender (limited to male and female).

The output of this API is structured as JSON as seen in the example below:

{“name”:”peter”,”gender”:”male”,”probability”:”0.99″,”count”:796}

This makes it very convenient to enrich the author data with each one’s gender. Since the probability of the predicted gender is included, one can set a threshold to ensure better quality predictions (we set our threshold at 60% — see below for code snippets). The value this API brings is the ability to determine the gender distribution of authors for a specified topic.

We did not have to worry about the API limit (1000 calls/day) since we were only able to scrape around 120 articles/day which on average resulted in less than 500 authors per day. If one is able to exceed this daily limit, the API limit would have to be taken into account. One way of avoiding this daily limit would be to check if the first name being evaluated has already been enriched in our database. This would allow us to determine the gender based on the existing data without wasting an API call.

Some code snippets for the tech hungry:

Connecting genderize:

Author gender enrichment:

Aylien Text Analysis

We were interested in seeing the growth of keywords over time for a specified topic (think Google Trends) and therefore decided that we should enrich our data with more keywords. To do this, we used an API called Aylien Text Analysis, specifically the concept extraction API. This API allows one to input text which after processing outputs a list of keywords extracted from the text using NLP. Two of the various fields we scraped for each article were the Title and Abstract, these fields were concatenated and used as the input for the API. An example of the output JSON can be seen below:

{

“text”:”Apple was founded by Steve Jobs, Steve Wozniak and Ronald Wayne.”,

“language”:”en”,

“concepts”:{

“http://dbpedia.org/resource/Apple_Inc.":{

“surfaceForms”:[

{

“string”:”Apple”,

“score”:0.9994597361117074,

“offset”:0

}

],

“types”:[

“http://www.wikidata.org/entity/Q43229”,

“http://schema.org/Organization",

“http://dbpedia.org/ontology/Organisation",

“http://dbpedia.org/ontology/Company"

],

“support”:10626

}

}

}

In order to avoid duplicate keywords we checked that the keyword did not already exist in the keyword table of our database. In order to avoid adding too many keywords per article, two methods were instituted. The first was a simple keyword limit as seen in the code snippet below. The other made use of the score (probability of relevance) available in the output file for each keyword — This allows one to set a threshold (we used 80%) to ensure the most relevant keywords were added for each article.

An example of how the API works is seen in the figure below:

Below is a snippet of the code we used to connect to the Aylien Text API service:

Connect to aylien:

Enrich keywords using Aylien API:

Summary

In this post we showed how one can use API calls in order to enrich the data to extract further insights. In our next post we are going to talk about how to set up a database in order to store the data (after enrichment) and how to access this data for visualization. Link —

Sneak peek to Part C — Storing your data and visualization:

Find us on Linkedin: Shai Ardazi, Eitan Kassuto